5 Obsidian Copilot AI Features You Can Use RIGHT NOW!

AI tools are popping up in almost every app these days… but the real question for me is, what’s the best way to actually use AI with Obsidian?

I’m breaking down five ways I use AI every day in Obsidian to speed things up and get more value out of my notes.

I’ll be honest: I avoided adding AI to Obsidian for a while because the idea of sending my data to an external service didn’t appeal to me. Then I found Copilot, an AI plugin for Obsidian that lets me decide whether my data gets sent to an external server or stays on my machine with a local language model.

And I want to share one workflow I never expected AI to completely change… and I’ll show you that a little later in the video.

Let’s get started.

Tagging using custom prompts

I’m currently using the properties feature to add tags to my notes. Tags make it easier to quickly search and find related content, though adding them manually can sometimes take time.

To speed things up, I use Copilot to generate a list of the top 10 tags I could apply. I then pick the most relevant ones and add them to my properties. This helps me organize my notes more effectively and makes future searches more useful.

Over time, this process has made my tags much more valuable for search queries, and it has also helped me keep them better organized.

Let’s see it in action.

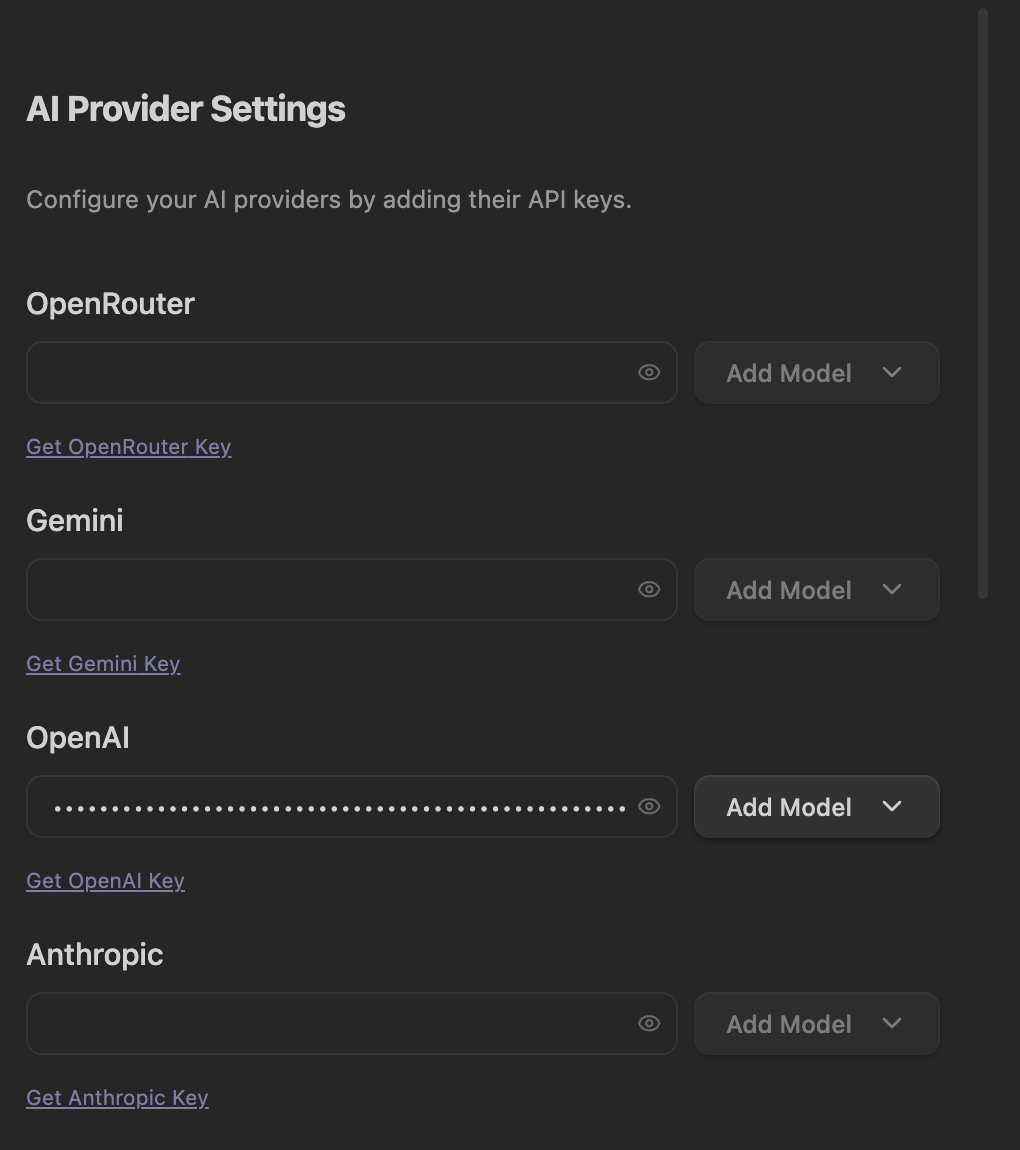

After installing Copilot from the Community plugins store, you can choose whether to add an API key from your AI provider. I originally tested it with OpenAI by adding my OpenAI API key.

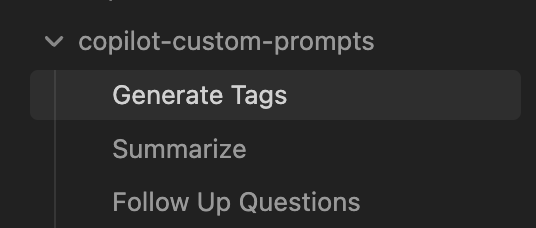

Next, I created a new note in the copilot-custom-prompts folder.

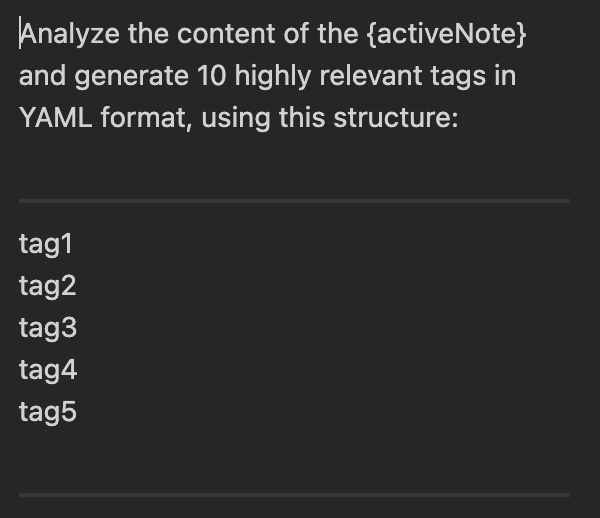

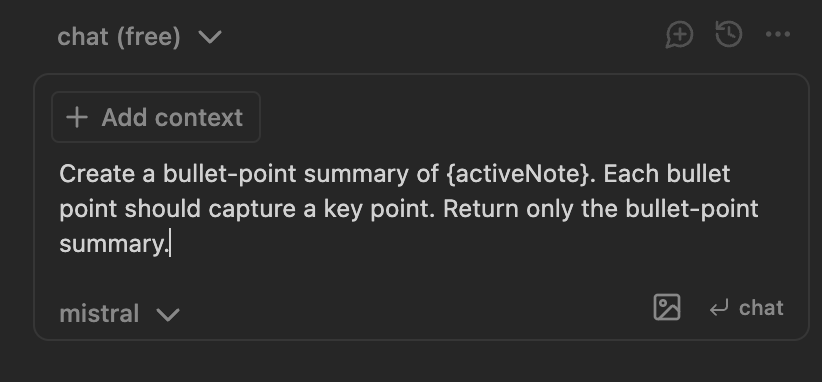

In that note, I wrote a prompt that generates a list of tags based on the active note.

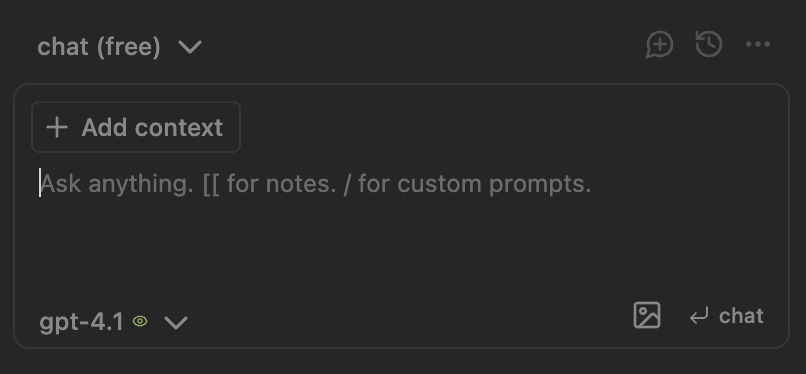

You can run this custom prompt by typing a forward slash (/) in the Copilot chat box and choosing it.

From the list it generates, I add the five best tags to my note’s properties.
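Copilot handles the prompt inside Obsidian, so none of this needs code. To make the cleanup step concrete, here is a minimal Python sketch that turns a list of suggested tags into tags ready for properties. It assumes the model answers with one tag per line, possibly numbered, bulleted, or starting with #. `parse_tag_suggestions` and `tags_property` are hypothetical helpers and are not part of Copilot.

```python
import re
from typing import List, Sequence

# Matches list markers such as "1.", "2)", "-" or "*" at the start of a line.
LIST_MARKER = re.compile(r"^\s*(?:\d+[.)]|[-*])\s*")


def parse_tag_suggestions(reply: str, keep: int = 5) -> List[str]:
    """Clean up a model's tag suggestions and keep the first `keep` unique ones."""
    tags: List[str] = []
    for line in reply.splitlines():
        # Drop the list marker, any leading "#", and normalize case.
        text = LIST_MARKER.sub("", line).strip().lstrip("#").strip().lower()
        if not text:
            continue
        tag = re.sub(r"\s+", "-", text)  # Obsidian tags can't contain spaces
        if tag not in tags:
            tags.append(tag)
    return tags[:keep]


def tags_property(tags: Sequence[str]) -> str:
    """Render tags as the YAML list Obsidian uses for the `tags` property."""
    return "tags:\n" + "".join(f"  - {t}\n" for t in tags)
```

Choosing the most relevant tags is still a judgment call. This sketch only keeps the first five in the order the model listed them.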

Querying offline

Copilot allows me to connect to Ollama, which gives access to several different large language models. I’m currently using Mistral and Gemma, depending on what I need to get done.

Having a local large language model makes me feel safer because everything stays on my machine. My notes never leave my device, so I don’t risk sensitive information being uploaded to a service.

I also like that I can query, summarize, and analyze my notes without needing an internet connection. This is especially useful when I’m traveling, working remotely, or in areas with poor connectivity.

Another big benefit is cost. Running the model locally avoids recurring API fees. On my M2 MacBook Air, responses are a bit slower than with the ChatGPT API, but their quality is very similar, so I don’t mind waiting.

Let’s set up a local LLM for Copilot.

I run my local LLM through Ollama.

Once you’ve installed Ollama, you just need to download and run your chosen model.

In my case, I’ve been using Mistral 7B.
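Copilot talks to Ollama for you, but it can help to see what a request to the local model looks like. Running `ollama run mistral` downloads the model on first use and then starts it. The minimal Python sketch below assumes Ollama is listening on its default address, `http://localhost:11434`, and sends a prompt to its `/api/generate` endpoint using only the standard library. The function names are my own and are only for illustration.

```python
import json
import urllib.request

# Ollama's local server listens on port 11434 by default.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        # With "stream": false, Ollama returns a single JSON object whose
        # "response" field holds the full generated text.
        return json.loads(resp.read())["response"]
```

With Ollama running and the model downloaded, `generate("mistral", "Summarize this note: ...")` should return the model’s reply as a string.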

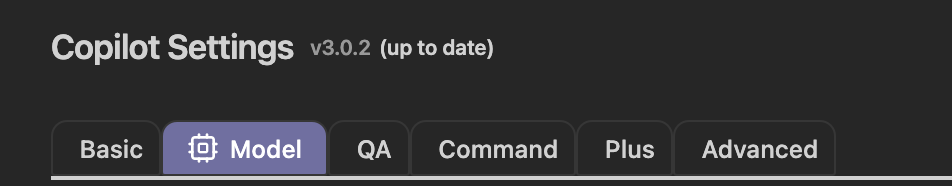

In Copilot’s settings, I set up a custom model in the Model tab.

I entered mistral as the model name and selected Ollama as the provider.
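If Copilot can’t reach the model, check that the model name matches a model Ollama actually has installed. Ollama’s `/api/tags` endpoint lists the local models, with names such as `mistral:latest`. The sketch below is a hypothetical helper that checks that list. It assumes a bare name like `mistral` refers to the `latest` tag, which is Ollama’s default.

```python
import json
import urllib.request


def fetch_installed_models(base_url: str = "http://localhost:11434") -> dict:
    """Return the parsed JSON from Ollama's GET /api/tags endpoint."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return json.loads(resp.read())


def model_is_installed(tags_response: dict, name: str) -> bool:
    """Check whether a model name appears in an /api/tags response.

    Assumption: a bare name such as "mistral" means the "latest" tag,
    so it is compared as "mistral:latest".
    """
    wanted = name if ":" in name else f"{name}:latest"
    return any(m.get("name") == wanted for m in tags_response.get("models", []))
```

With Ollama running, `model_is_installed(fetch_installed_models(), "mistral")` should return True once the model has been downloaded.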

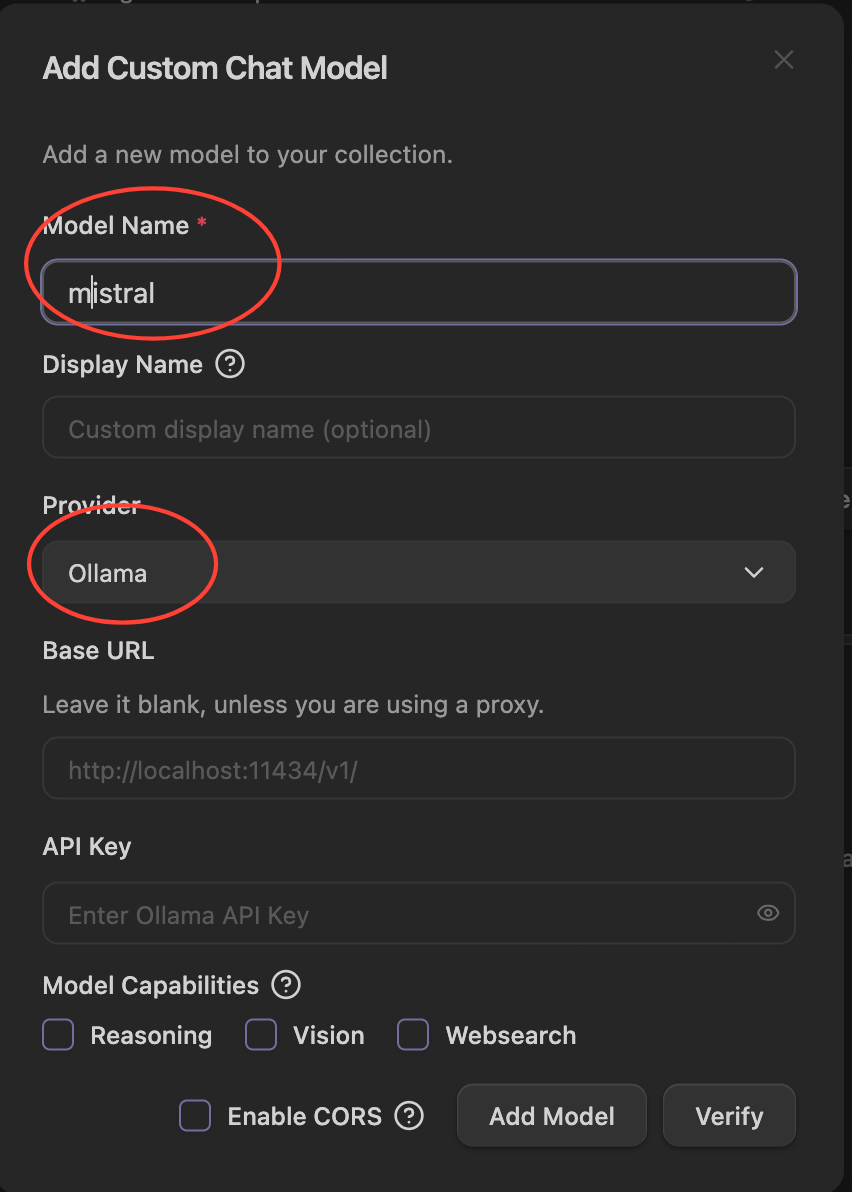

Once it’s set up, you can switch between LLMs using the model drop-down in the chat box.

Summaries using different LLMs

One of the most useful features I’ve been using is summarization for notes with a large amount of text. This often happens when I import transcripts from YouTube videos I’m interested in.

I use the standard Copilot query to generate a bullet-point breakdown of a note, which I then add to the top of my notes. This saves me time because I can quickly scan the summary and only read further if a bullet point covers something I need.
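If you want to script the "summary at the top" step, watch out for properties. Obsidian stores them as YAML front matter between two `---` lines at the very start of the file, so the summary has to go below that block. Here is a minimal Python sketch that relies on that layout. The `## Summary` heading and the function name are my own choices, not something Copilot adds.

```python
import re
from typing import Sequence

# Properties block: a "---" line, optional YAML lines, then a closing "---" line.
FRONT_MATTER = re.compile(r"---\n(?:.*?\n)?---(?:\n|$)", re.DOTALL)


def add_summary_to_top(note: str, bullets: Sequence[str], heading: str = "## Summary") -> str:
    """Insert a bullet-point summary at the top of a note, below its properties."""
    summary = heading + "\n" + "".join(f"- {b}\n" for b in bullets) + "\n"
    match = FRONT_MATTER.match(note)
    if not match:
        # No properties: the summary becomes the first thing in the note.
        return summary + note
    head, body = note[: match.end()], note[match.end():]
    if not head.endswith("\n"):  # closing "---" was the last line of the file
        head += "\n"
    return head + summary + body
```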

Another feature I like is the ability to use different LLMs depending on my needs.

When I compared a local LLM such as Mistral with an external one like ChatGPT, I found that the bullet-point summaries were almost identical.

I’ve also been experimenting with asking the summaries to focus on specific subjects I’m interested in. This helps me skip irrelevant details and focus on the topics that matter to me, making my note-taking and learning more personalized.
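Focusing a summary mostly comes down to how the prompt is worded. As a rough sketch, here is one way to build such a prompt in Python and send the same prompt to several local models so their summaries can be compared side by side. The prompt wording is hypothetical. The request bodies assume Ollama’s `/api/generate` format (`model`, `prompt`, `stream`).

```python
from typing import List, Optional, Sequence


def summary_prompt(note_text: str, focus: Optional[Sequence[str]] = None) -> str:
    """Build a bullet-point summary prompt, optionally narrowed to some topics."""
    lines = ["Summarize the note below as a short bullet-point list."]
    if focus:
        # Steer the model toward the topics I care about.
        lines.append("Only include points about: " + ", ".join(focus) + ".")
    lines += ["", "Note:", note_text]
    return "\n".join(lines)


def requests_for_models(models: Sequence[str], prompt: str) -> List[dict]:
    """One non-streaming /api/generate body per model, same prompt for each."""
    return [{"model": m, "prompt": prompt, "stream": False} for m in models]
```

For example, `requests_for_models(["mistral", "gemma"], summary_prompt(text, ["privacy"]))` gives two request bodies that differ only in the model name.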

Generating follow-up questions

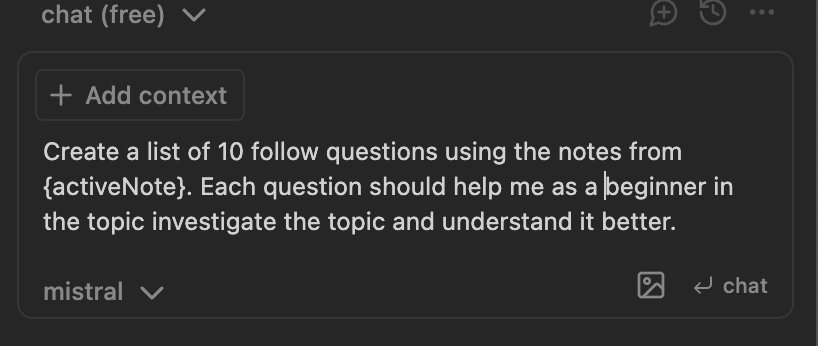

As part of my note-taking process, I always think of follow-up questions to investigate later, and until now I’ve been doing this manually.

Now, I ask Copilot to generate these questions for me. This adds clarity to my notes and helps me understand the topic more deeply, often in ways I might not have considered.

The questions also provide me with clear next steps, helping me stay focused without falling into rabbit holes or going in circles.
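To keep those next steps actionable, you can turn the questions into a checklist. This minimal Python sketch assumes the model returns one question per line. It drops any line that doesn’t end with a question mark, such as an intro sentence, and formats the rest as Markdown tasks (`- [ ]`), which Obsidian renders as checkboxes. The heading and function name are hypothetical.

```python
import re

# Matches list markers such as "1.", "2)", "-" or "*" at the start of a line.
LIST_MARKER = re.compile(r"^\s*(?:\d+[.)]|[-*])\s*")


def followup_tasks(reply: str, heading: str = "## Follow-up questions") -> str:
    """Turn a model's list of questions into an Obsidian task-list section."""
    tasks = []
    for line in reply.splitlines():
        text = LIST_MARKER.sub("", line).strip()
        if text.endswith("?"):  # keep only actual questions
            tasks.append(f"- [ ] {text}")
    return heading + "\n" + "\n".join(tasks) + "\n"
```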

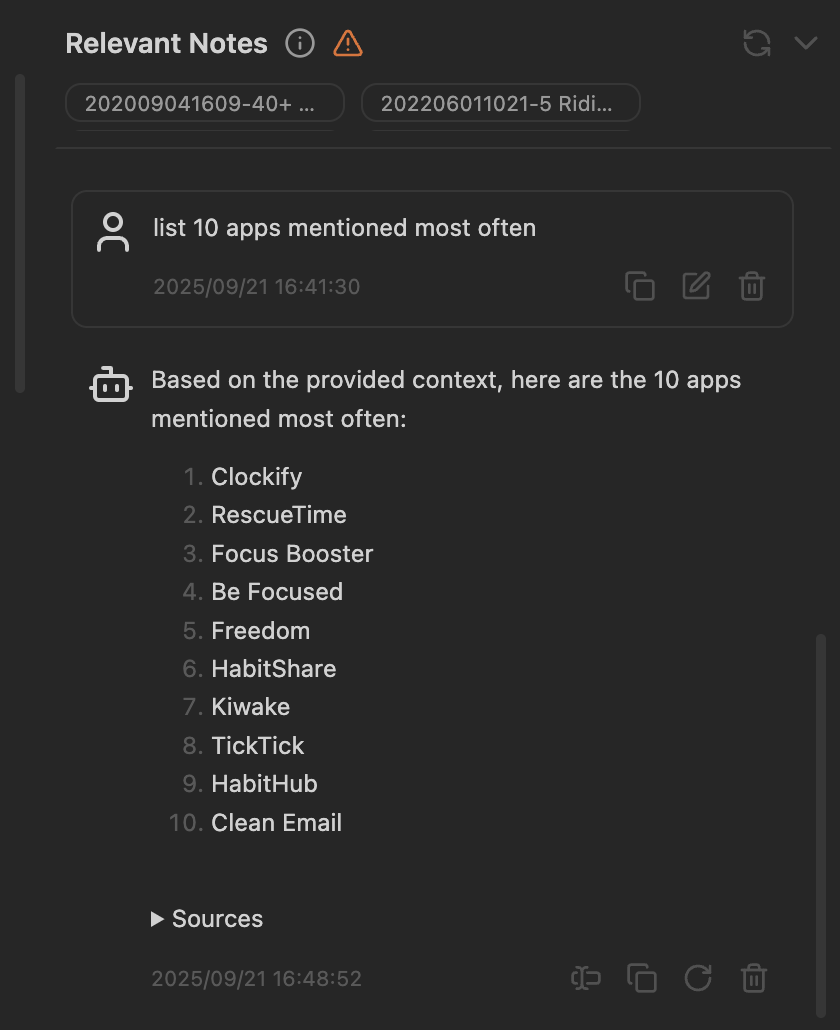

Vault QA

One of the biggest ways Copilot has improved my workflow is by letting me ask questions and find answers directly in my notes, which I’ve built up over many years of using Obsidian.

Instead of manually searching through pages of text, the AI can quickly pinpoint the exact section I need or summarize the key points.

I also like that the AI works with my own notes: its answers reflect my wording and priorities rather than generic results from the internet.

Another benefit is how the AI links ideas across different notes, highlighting common concepts and surfacing insights I might have otherwise missed.

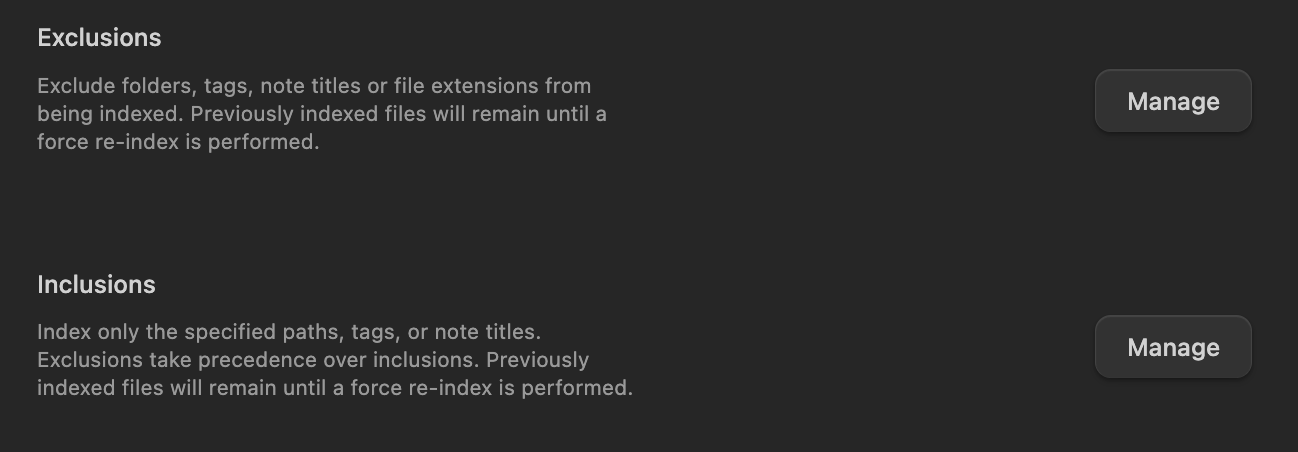

Copilot also lets you customize Vault QA. For example, you can set inclusions and exclusions to limit which folders are searched, giving you more control over where the answers come from.
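The exact matching rules belong to Copilot, but the idea fits in a few lines of Python. This hypothetical helper assumes that exclusions take priority and that, when any inclusions are set, a note must sit inside one of those folders. Check the plugin’s settings to see how it actually behaves.

```python
from pathlib import PurePosixPath
from typing import Iterable


def in_scope(note_path: str, include: Iterable[str] = (), exclude: Iterable[str] = ()) -> bool:
    """Decide whether a vault-relative note path should be searched.

    Assumed rules: exclusions win; if any inclusions are given,
    the note must live inside one of those folders.
    """
    folders = {str(p) for p in PurePosixPath(note_path).parents}

    def inside(names: Iterable[str]) -> bool:
        # Normalize entries like "Projects/" to "Projects" before comparing.
        return any(str(PurePosixPath(n)) in folders for n in names)

    include = list(include)  # used twice below
    if inside(exclude):
        return False
    return inside(include) if include else True
```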

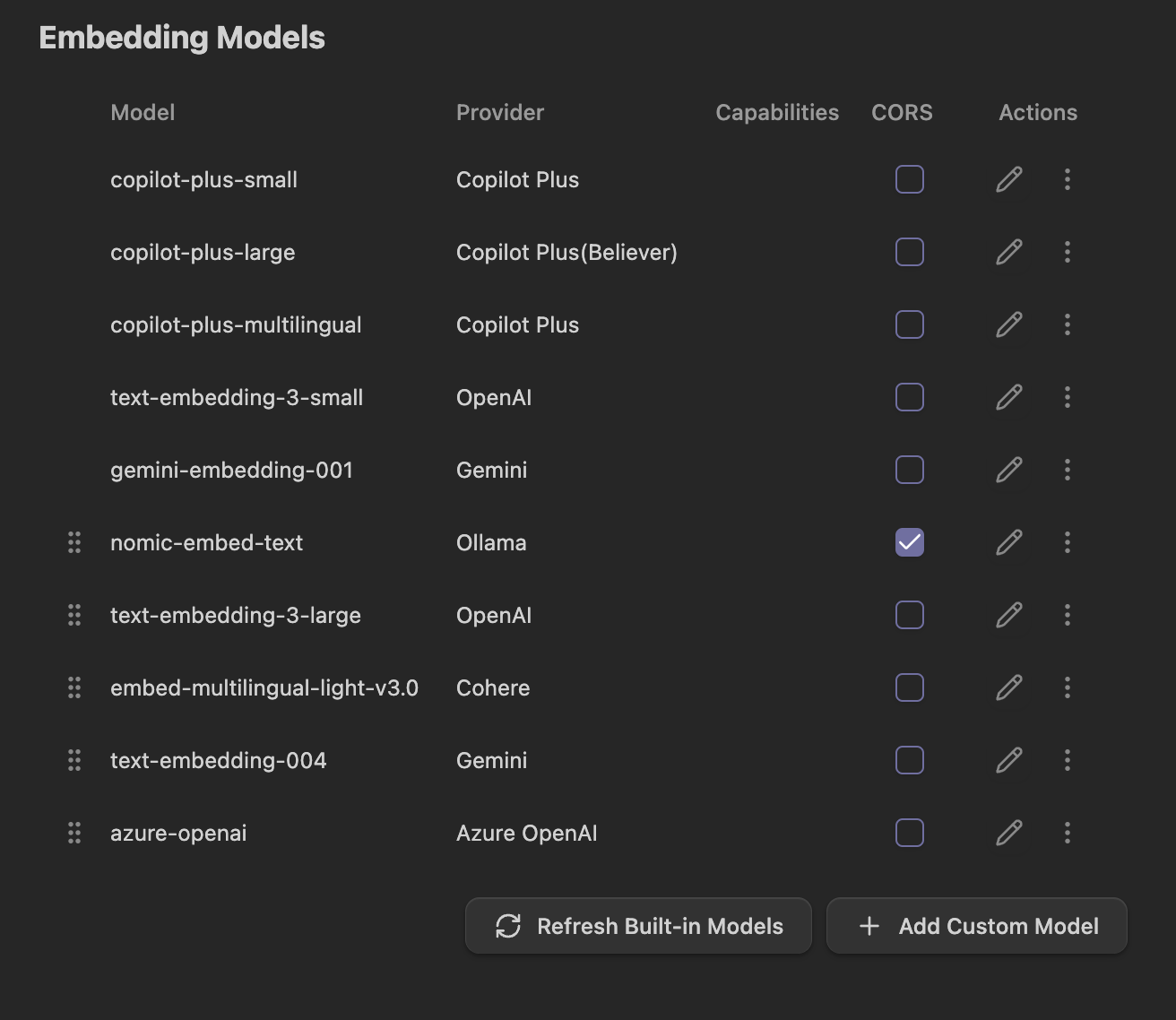

I’ve had the most success using the nomic-embed-text option via Ollama to ensure my notes are processed locally. It takes a bit of time, but I’ve been happy with the results.

The setup is similar to how I configured Mistral, which I explained earlier, but this one is done in the embedding model section.
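Embedding-based search like this generally works by turning each note, or chunk of a note, into a vector with the embedding model (nomic-embed-text in my case). Notes are then ranked by how close their vectors are to the question’s vector. The Python sketch below shows only that ranking step, using cosine similarity. The tiny three-number vectors are made-up stand-ins for real embeddings, and Copilot’s actual indexing and ranking may differ.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_sources(question: Sequence[float], notes: Dict[str, Sequence[float]], k: int = 3) -> List[str]:
    """Return the paths of the k notes whose vectors are closest to the question."""
    ranked = sorted(notes, key=lambda path: cosine(question, notes[path]), reverse=True)
    return ranked[:k]
```

The top-ranked notes are the kind of sources an answer would then point back to.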

To use it, I switch the chat mode to Vault QA in the chat box and ask my question.

Copilot generates an answer and lists its sources, with links to the notes it used, so I can refer back to them.

Overall, the Copilot plugin is very useful.

I plan to use it even more as I integrate AI into Obsidian while still maintaining the integrity of my vault.

Copilot also offers advanced paid options, but for now, I find that the free version gives me everything I need.

What about you? Are you planning to use AI in your Obsidian vault?

Let me know in the comments below. Until next time, goodbye!